If you’re dipping your toes into deep learning, you’ve likely encountered three key terms: GPU, workstation, and model training. If you’re anything like I was early on, those words can be daunting. However, by the time you finish this article, you’ll not only understand the concepts but also know how to set up your own GPU workstation for deep learning (and why it’s worth it!).

Why a GPU Workstation for Deep Learning Is a Game-Changer

Let’s start with a short tale.

A good friend of mine, Ali, tried to build a Convolutional Neural Network (CNN) for a school assignment. He was using his regular laptop. The model took three hours just to get through one epoch! Frustrated, he nearly gave up. He then tried running the identical model on a GPU workstation that I had set up earlier. And guess what? The same task finished in less than 10 minutes.

That’s a speedup of more than 18x (three hours down to under 10 minutes), and it’s the kind of gain we’re talking about!

A GPU (Graphics Processing Unit) is designed to process data in parallel. This means it can handle the huge number of calculations needed for deep learning far more efficiently than a regular CPU.

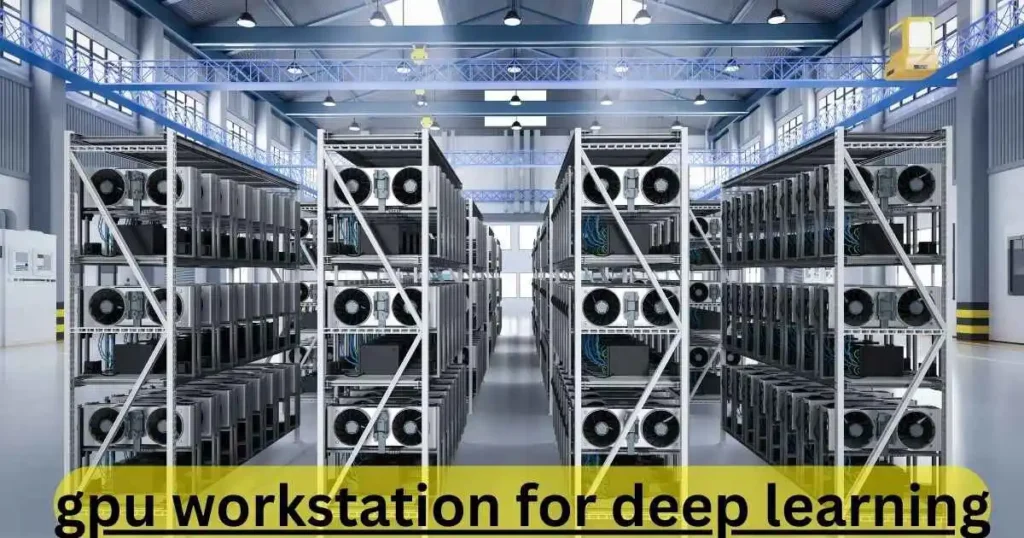

🛠️ What is a GPU Workstation for Deep Learning?

A GPU workstation for deep learning is a powerful computer built to handle computation-heavy jobs, like training machine learning or deep learning models.

Instead of relying solely on the CPU, these workstations use one or more powerful GPUs, such as the NVIDIA RTX series or the A100, which are built to perform matrix operations, the core of deep learning.
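To make “matrix operations” concrete, here is a minimal pure-Python sketch of the forward pass of a single dense layer. The function names `dense_forward` and `multiply_add_count` and the toy numbers are illustrative assumptions, not part of any library. Each output value depends on only one input row and one weight column, so all of them could be computed at the same time, which is the kind of work a GPU spreads across its many cores.

```python
def dense_forward(inputs, weights):
    """Multiply a batch of inputs (rows) by a weight matrix (nested lists)."""
    n_in = len(weights)
    n_out = len(weights[0])
    outputs = []
    for row in inputs:
        # Each output element is an independent dot product: none of them
        # needs the result of another, so they could all run in parallel.
        outputs.append([
            sum(row[k] * weights[k][j] for k in range(n_in))
            for j in range(n_out)
        ])
    return outputs


def multiply_add_count(batch, n_in, n_out):
    """Number of multiply-add operations in one dense-layer forward pass."""
    return batch * n_in * n_out


samples = [[1.0, 2.0], [3.0, 4.0]]             # 2 samples, 2 features each
layer = [[0.5, -1.0, 0.0], [1.5, 2.0, 1.0]]    # 2 inputs -> 3 outputs
result = dense_forward(samples, layer)
print(result)

# Hypothetical layer size, to show how quickly the work grows.
print(multiply_add_count(batch=64, n_in=4096, n_out=4096))
```

Even this one hypothetical layer needs about a billion multiply-adds per batch, and a real network repeats that across many layers and many batches.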


🧱 Key Components of a GPU Workstation for Deep Learning

If you’re considering buying or building a GPU workstation for deep learning, here are the main parts you need to know:

1. GPU (Graphics Card)

This is the most important component. Look for:

- NVIDIA RTX 3090, A100, or Titan RTX

- 24GB of VRAM for training large models
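To get a feel for why VRAM matters, here is a rough sketch that estimates the memory needed just to hold a model during training. It assumes 32-bit floats and an Adam-style optimizer that keeps two extra values per parameter; the function names and the model sizes are hypothetical. Activations are not counted, so treat the result as a lower bound.

```python
BYTES_PER_GIB = 1024 ** 3


def training_memory_gib(num_params, bytes_per_value=4, optimizer_states=2):
    """Rough lower bound on GPU memory needed to train a model.

    Counts weights, gradients, and optimizer state only. Activations,
    which depend on batch size and architecture, are NOT included.
    """
    copies = 1 + 1 + optimizer_states  # weights + gradients + optimizer state
    return num_params * bytes_per_value * copies / BYTES_PER_GIB


def fits_in_vram(num_params, vram_gib=24):
    """True if the rough estimate fits in the given VRAM (24 GiB by default)."""
    return training_memory_gib(num_params) <= vram_gib


for params in (100e6, 1e9, 3e9):  # hypothetical model sizes
    need = training_memory_gib(params)
    print(f"{params / 1e6:.0f}M parameters: ~{need:.1f} GiB, "
          f"fits in 24 GiB: {fits_in_vram(params)}")
```

Under these assumptions a one-billion-parameter model needs roughly 15 GiB before any activations are stored, which is why a 24GB card is a sensible starting point for larger models.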

2. CPU (Central Processing Unit)

Although the GPU does most of the heavy lifting, a strong CPU improves overall performance. Aim for:

- Intel i9 or AMD Ryzen 9

- At least eight cores

3. RAM

Deep learning workloads consume a significant amount of memory. Recommended:

- Minimum 64GB RAM

- More if you plan to multitask

4. Storage

You’ll need speed and space.

- 1TB NVMe SSD for the OS and applications

- Optional: HDD for dataset storage

5. Motherboard & Power Supply

Make sure both can support high-wattage GPUs and that the motherboard has enough PCIe slots.
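As a rough way to size the power supply, you can add up the power draw of the main components and leave some headroom. The sketch below is only an illustration: the function name, the wattage figures, and the 30% headroom are assumptions, so check the spec sheets of your actual parts.

```python
import math


def recommended_psu_watts(gpu_watts, gpu_count, cpu_watts,
                          other_watts=100, headroom_percent=30):
    """Sum component power draw and add headroom for load spikes."""
    total = gpu_watts * gpu_count + cpu_watts + other_watts
    return math.ceil(total * (100 + headroom_percent) / 100)


# Placeholder numbers only: 350 W per GPU, 125 W CPU, 100 W for everything else.
single_gpu = recommended_psu_watts(gpu_watts=350, gpu_count=1, cpu_watts=125)
dual_gpu = recommended_psu_watts(gpu_watts=350, gpu_count=2, cpu_watts=125)
print(f"One GPU:  at least {single_gpu} W")
print(f"Two GPUs: at least {dual_gpu} W")
```

The point of the exercise is that adding a second GPU changes the power budget a lot, so it pays to decide how many cards you want before choosing the power supply and motherboard.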

🧰 Setting Up Your GPU Workstation (Step-by-Step)

Here’s a simplified, beginner-friendly walkthrough of the setup:

Step 1: Install Ubuntu

Use Ubuntu as your OS; it’s reliable and widely supported by AI tools.

Step 2: Connect via SSH (Remote Access)

Use PuTTY (or any other SSH client) to connect securely to the machine if you’re setting it up remotely.

Step 3: Install NVIDIA Drivers

Run:

sudo sh nvidia_driver.run

Then verify that the graphics card is recognized:

nvidia-smi

Step 4: Install CUDA Toolkit

CUDA allows your system to communicate with your GPU.

sudo sh cuda_10.run

Add the CUDA paths:

export PATH=$PATH:/usr/local/cuda/bin

export CUDADIR=/usr/local/cuda

Step 5: Install cuDNN

cuDNN is a GPU-accelerated library for deep neural networks.

sudo dpkg -i cudnn_runtime.deb

Step 6: Install Anaconda

Use Anaconda to manage your Python environments.

sudo sh anaconda_installer.sh

Install it to the directory /opt/anaconda3 so that it is available system-wide.
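Once the steps above are done, a short script can confirm that the tools actually ended up on your PATH. This is a minimal sketch using only the Python standard library; `find_tools` and `gpu_names` are hypothetical helper names, and the script simply reports nothing found if a tool is missing.

```python
import os
import shutil
import subprocess


def find_tools(names=("nvidia-smi", "nvcc", "conda")):
    """Map each command name to its full path, or None if it is not on PATH."""
    return {name: shutil.which(name) for name in names}


def gpu_names():
    """Return the GPU names reported by nvidia-smi, or [] if it is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        completed = subprocess.run(
            ["nvidia-smi", "--query-gpu=name", "--format=csv,noheader"],
            capture_output=True, text=True, check=True, timeout=30,
        )
    except (subprocess.SubprocessError, OSError):
        # Driver not loaded, command failed, or command timed out.
        return []
    return [line.strip() for line in completed.stdout.splitlines() if line.strip()]


for tool, path in find_tools().items():
    print(f"{tool:<12} {path or 'NOT FOUND on PATH'}")

print("CUDADIR     ", os.environ.get("CUDADIR", "not set"))
print("GPUs        ", gpu_names() or "none detected")
```

If `nvcc` shows up as not found, the usual cause is that the `export` lines from Step 4 were not run in the current shell.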

🧪 Testing Your Setup

Let’s check if everything’s functioning.

- Create a new conda environment:

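conda create -n tf1 python=3.8

conda activate tf1

- Install TensorFlow with GPU support:

pip install tensorflow-gpu

On recent TensorFlow 2.x releases, GPU support ships in the plain tensorflow package and the separate tensorflow-gpu package has been retired, so use pip install tensorflow there. Either way, pick a TensorFlow release that matches your installed CUDA and cuDNN versions.

- Test it in Python:

import tensorflow as tf

print(tf.config.list_physical_devices('GPU'))

If it lists a GPU, congratulations! You’re now ready to train.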
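For a slightly more thorough check, the sketch below also runs a small matrix multiplication on the GPU to confirm that it does real work. It assumes TensorFlow 2.x; `tensorflow_gpu_report` is a hypothetical helper name, and the function returns `None` instead of failing when TensorFlow is not installed.

```python
def tensorflow_gpu_report():
    """Describe TensorFlow's view of the GPU, or return None if TensorFlow is missing."""
    try:
        import tensorflow as tf
    except ImportError:
        return None

    gpus = tf.config.list_physical_devices("GPU")
    report = {
        "version": tf.__version__,
        "built_with_cuda": tf.test.is_built_with_cuda(),
        "gpus": [gpu.name for gpu in gpus],
    }
    if gpus:
        # Run a small matrix multiply on the first GPU to confirm that it
        # executes work, not just that the device is listed.
        with tf.device("/GPU:0"):
            a = tf.random.normal((1024, 1024))
            product = tf.matmul(a, a)
        report["matmul_device"] = product.device
    return report


print(tensorflow_gpu_report())
```

An empty `gpus` list with `built_with_cuda` set to `True` usually points to a driver, CUDA, or cuDNN version mismatch rather than a problem with TensorFlow itself.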

🌎 Why This Matters for Your AI Projects

Deep learning today, from Computer Vision to Natural Language Processing (NLP), demands serious computing power. Whether you’re building chatbots, detecting disease, or creating recommendation systems, a GPU workstation lets you move faster and achieve more.

“Time is money,” as the old saying goes, and a GPU saves you both.

💬 Real-Life Impact: A Success Story

Remember my friend Ali? After he switched to a GPU-powered workstation, he not only finished his assignment early but was also able to add a real-time object recognition system to it. The project earned him an internship at a prestigious AI startup.

That is the power of the right tools.

💰 Should You Buy or Build?

You can purchase a prebuilt workstation, or you can build your own if you enjoy making things. In either case, you need high-performance GPUs, fast SSDs, and plenty of RAM.

✅ Final Thoughts: Is It Worth It?

If you’re serious about deep learning, you’ll need a GPU-powered workstation. It isn’t just a luxury; it’s a requirement. It saves time, lets you build larger models, and prepares you for real-world AI work.

With this guide, you’re now equipped to make an informed, confident choice, whether you’re a freelancer, student, or researcher.

Ready to boost your AI journey? Build or buy your GPU-powered workstation and start accelerating your learning today.

When building a GPU workstation for deep learning, it’s important to follow best practices for training models so you can get faster results and make the most of your hardware.